Challenges in Enabling the Future of AR and the Metaverse

The Metaverse is Here

“The Metaverse is here.” It is a phrase that sends a beautiful combination of excitement and eye-rolling across any crowd. Although the term has been overhyped in recent years, the concept of bringing people together by linking the digital and physical worlds is nothing new; it has been a key driving force of technological innovation for more than 50 years. From TVs and computers to smartphones and wearables, electronic devices have given humanity unprecedented access to information and to one another.

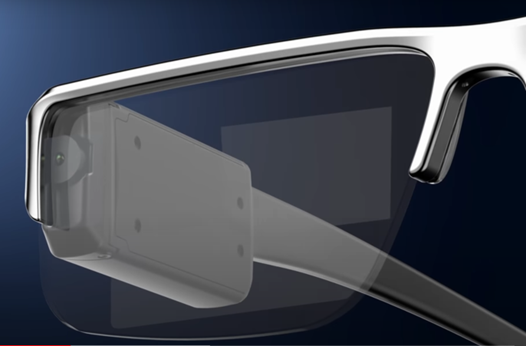

So, while many people agree that we already use a primitive form of the Metaverse, what we envision as the future of human-digital interaction looks more like science fiction: glasses and screens that display real-time information and let us control the digital world with simple eye or hand gestures, or even mere thought. Many companies are focusing on this new paradigm, creating software and user interfaces that mark a clear and significant shift from the application-based interfaces we use today.

Take checking the weather as an example. Under today's application-based interface, the user must (1) open an application (usually on a smartphone), (2) wait for the information to load and update, and (3) read the displayed information. Now imagine going straight to step 3, with the weather already overlaid on a convenient physical location, such as the front door of your home. From the user's perspective, the weather would always be there on the door, persisting even while the door is out of view: a clear improvement in user experience. But a considerable amount of work happens “behind the scenes” to make this possible: the headset must recognize when the user's front door is in view, render an intuitive interface with information updated in real time, and do all of this quickly and seamlessly, before the user even realizes anything had to happen.
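
As a rough illustration of one of those behind-the-scenes steps, the sketch below decides, for each frame, whether a world-locked anchor (the front door) falls inside the headset's horizontal field of view, and only then draws a weather label that was fetched ahead of time. It is a simplified 2D model under stated assumptions: the pose representation, door coordinates, field-of-view value and function names are all hypothetical, and recognizing the door from camera data is not shown.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class Pose:
    """Hypothetical 2D headset pose in a room-fixed frame."""
    x: float        # metres
    y: float        # metres
    yaw_deg: float  # heading: 0 = +x axis, counter-clockwise positive

def anchor_in_view(pose: Pose, anchor_xy: tuple[float, float], fov_deg: float) -> bool:
    """True if a world-locked anchor lies inside the horizontal field of view."""
    dx = anchor_xy[0] - pose.x
    dy = anchor_xy[1] - pose.y
    bearing_deg = math.degrees(math.atan2(dy, dx))
    # Signed angle from the gaze heading to the anchor, wrapped into [-180, 180).
    offset_deg = (bearing_deg - pose.yaw_deg + 180.0) % 360.0 - 180.0
    return abs(offset_deg) <= fov_deg / 2

def overlay_for_frame(pose: Pose, door_xy: tuple[float, float],
                      fov_deg: float, cached_forecast: str) -> Optional[str]:
    """Return the label to draw this frame, or None if the door is out of view.

    The forecast is assumed to be refreshed in the background, so drawing it
    needs no network round trip once the door comes into view.
    """
    if anchor_in_view(pose, door_xy, fov_deg):
        return f"Weather: {cached_forecast}"
    return None

if __name__ == "__main__":
    door = (3.0, 0.0)  # hypothetical door position, 3 m ahead along +x
    print(overlay_for_frame(Pose(0.0, 0.0, 0.0), door, 50.0, "18°C, light rain"))
    print(overlay_for_frame(Pose(0.0, 0.0, 90.0), door, 50.0, "18°C, light rain"))
```

Keeping the forecast cached and only gating the drawing step on visibility is what lets the label appear instantly; a production headset would use full 3D poses and view frustums rather than a single yaw angle.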

While software has progressed, and will continue to progress, to satisfy our desire for instant, convenient access to large amounts of contextually relevant information, hardware has not kept pace. It is limited by the size, power-efficiency and performance requirements needed to support that software, all of which must be met at consumer-friendly price points. In fact, with every software advancement, the requirements on computing power and display systems become even more stringent.

Today's Hardware

This explains the expensive, heavy and bulky MR/VR headsets on the market today. Advances in 3D rendering, real-time image analysis and AI software have been monumental in enabling AR experiences that improve the way we communicate and consume information. But the computational intensity of real-time image analysis and 3D rendering limits how useful these advances can be in practice. Take the recently announced Apple Vision Pro: while impressive in capability, it is not a device you can imagine grabbing on your way out the door. In its present form, it is far better suited to content consumption at home, replacing a TV or tablet, than to the true experiential augmentation of real life that AR glasses have long promised. Still, even with its bulky form factor, the Apple Vision Pro clearly pushes the boundaries of current hardware. But it does so at a premium price beyond what a typical consumer is willing to pay, so it is not positioned for widespread adoption at this time.

On the other side, companies that prioritize form factor, such as Xreal with its small and lightweight Air AR glasses, offer products with limited functionality and dimmer-than-ideal displays. These often function as a monitor, tethered to your phone, that you wear as glasses, with no real-time augmentation of daily life (because they lack the necessary sensors and real-time data processing). Even with this limited functionality and the need for a cable to the phone (because they lack native wireless capability), most of these glasses are still too bulky for everyday use.

Addressing Hardware Challenges in Metaverse Adoption

So there are two key areas where present state-of-the-art solutions fall short of enabling the next stage of Metaverse adoption: (1) high-quality display technology that supports augmented and immersive environments; and (2) the size, weight and power consumption of the devices. For the sake of this discussion, we consider the end goal to be a glasses-form-factor device loaded with smart technology (sensors, eye tracking, real-time image analysis, etc.) that enables easy, mass adoption.

Display Technology

Consider first the challenges of augmented and immersive display technology. Intuitive user interfaces and an immersive experience demand high brightness and a wide field of view (FOV). AR projection displays are usually made from two components: the display engine and the waveguide/optical system. The display engine (projector) needs to be small (< 1 cm³), bright (1 million nits), power efficient (< 5 mW) and capable of HD resolution (1080p+). Taken together, these requirements lay down a monumental technical gauntlet that only a few display technologies can meet, which is a separate discussion in itself. The general consensus is that microdisplays based on inorganic MicroLEDs are the most promising option for bright, power-efficient, high-resolution display engines.
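
The point that these requirements are hard *in combination* can be expressed as a simple checklist: a candidate display engine only qualifies if it meets every target at once. The sketch below encodes the four targets quoted above; the class and function names and the example candidate are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

# Targets quoted in the text above.
MAX_VOLUME_CM3 = 1.0           # "< 1 cm³"
MIN_BRIGHTNESS_NITS = 1_000_000
MAX_POWER_MW = 5.0             # "< 5 mW"
MIN_VERTICAL_LINES = 1080      # "1080p+"

@dataclass
class DisplayEngineSpec:
    """Hypothetical description of a candidate display engine."""
    volume_cm3: float
    brightness_nits: float
    power_mw: float
    vertical_resolution: int

def unmet_requirements(spec: DisplayEngineSpec) -> list[str]:
    """List every target from the text that the candidate misses."""
    misses = []
    if spec.volume_cm3 >= MAX_VOLUME_CM3:
        misses.append("size")
    if spec.brightness_nits < MIN_BRIGHTNESS_NITS:
        misses.append("brightness")
    if spec.power_mw >= MAX_POWER_MW:
        misses.append("power")
    if spec.vertical_resolution < MIN_VERTICAL_LINES:
        misses.append("resolution")
    return misses

if __name__ == "__main__":
    # Hypothetical engine: small, bright and frugal, but only 720p.
    candidate = DisplayEngineSpec(0.5, 2_000_000, 3.0, 720)
    print(unmet_requirements(candidate))
```

Missing any single target (here, resolution) disqualifies an otherwise strong candidate, which is why so few display technologies are in contention.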

The good news is that early prototypes are not as far off as many people fear. AR displays will follow a development path similar to that of other display-centric devices (TVs, smartphones, smartwatches/wearables), in which resolution and brightness start modest and improve from generation to generation. For example, VGA to 720p display engines are sufficient to enable early forms of augmented reality, but immersive video experiences will be limited at first. This is due to the difficulty of manufacturing display engines with high pixel density: such microdisplays typically rely on costly, low-yield advanced packaging solutions that will limit adoption in the near term.
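
A little arithmetic shows why pixel density is the manufacturing bottleneck. In a MicroLED microdisplay each pixel is its own light emitter, so the number of LEDs to fabricate and connect grows with pixel count. The sketch below uses the standard pixel dimensions for each format and assumes three emitters (red, green, blue) per full-color pixel; the function names are illustrative.

```python
# Standard pixel dimensions for the formats named in the text.
RESOLUTIONS = {
    "VGA": (640, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}

def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a width x height panel."""
    return width * height

def emitter_count(width: int, height: int, subpixels: int = 3) -> int:
    """Emitters to fabricate, assuming `subpixels` LEDs per pixel (3 for RGB)."""
    return pixel_count(width, height) * subpixels

def scale_vs(baseline: str = "VGA") -> dict[str, float]:
    """Pixel count of each format relative to the baseline format."""
    base = pixel_count(*RESOLUTIONS[baseline])
    return {name: pixel_count(w, h) / base for name, (w, h) in RESOLUTIONS.items()}

if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        print(f"{name:>5}: {pixel_count(w, h):>9,} pixels, "
              f"{emitter_count(w, h):>9,} RGB emitters")
    print(scale_vs())
```

Under these assumptions a 1080p panel has 6.75 times as many pixels as VGA, or about 6.2 million RGB emitters, each of which is a potential defect. That is why yield, and therefore cost, gets harder with every step up in resolution.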

nsc’s PixelatedLightEngine™ (PLE) technology is ideally suited for microdisplays. PLE technology combines GaN LEDs and silicon CMOS transistors at wafer scale, eliminating the need for advanced packaging solutions. In addition, its chip-oriented manufacturing techniques draw on the benefits and lessons of the CMOS manufacturing industry to improve microdisplay yield and scalability, ultimately bringing device prices to levels suitable for consumer products.

Wireless Technology

Because of the software advancements described above, the expected computational load of an AR headset has grown from simply displaying search results or today’s date (see Google Glass, 2013) to analyzing the user’s surroundings, gestures and biological signals in real time while also rendering immersive, high-resolution video content. Long story short: these tiny, lightweight headsets need the computing power of at least your cell phone, if not something closer to a high-end desktop PC. Fitting that much compute into something the size of a pair of glasses just isn’t possible anytime soon (even if Moore’s Law keeps chugging along).

So instead of doing the computation on the glasses, we can use wireless communication to offload computationally intensive tasks to cloud servers. This is similar to what NVIDIA is doing quite successfully with its “GeForce NOW” service, which lets even weak and old PCs play modern AAA games by doing the computation in the cloud and streaming the results to the PC. However, this only works with a very low-latency, high-bandwidth internet connection, which is hard to maintain on the move. It is not much of a challenge at home over Wi-Fi, but with current 4G/early 5G cellular infrastructure, the bandwidth to support this much real-time cloud connectivity outside the home is not there. In addition, the present 5G wireless technologies that do support these high data rates consume large amounts of power, which calls for larger battery packs and, you guessed it, a bulkier device.
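
To see why bandwidth is the sticking point, here is a back-of-the-envelope estimate of the data rate needed to stream cloud-rendered frames to a headset. The resolution, frame rate, color depth and compression ratio below are illustrative assumptions, not figures from any specific product or video codec.

```python
from dataclasses import dataclass

@dataclass
class StreamConfig:
    """Illustrative streaming parameters; every default is an assumption."""
    width: int = 1920                 # pixels per eye (1080p)
    height: int = 1080
    eyes: int = 2                     # one rendered view per eye
    bits_per_pixel: int = 24          # 8-bit RGB, no alpha
    fps: int = 90                     # assumed refresh rate
    compression_ratio: float = 100.0  # hypothetical codec compression ratio

    def raw_bitrate_bps(self) -> int:
        """Uncompressed bit rate of the rendered frames."""
        return self.width * self.height * self.eyes * self.bits_per_pixel * self.fps

    def compressed_bitrate_bps(self) -> float:
        """Bit rate after the assumed video compression."""
        return self.raw_bitrate_bps() / self.compression_ratio

    def frame_interval_ms(self) -> float:
        """Time available to deliver each frame, in milliseconds."""
        return 1000.0 / self.fps

if __name__ == "__main__":
    cfg = StreamConfig()
    print(f"raw:        {cfg.raw_bitrate_bps() / 1e9:.2f} Gbit/s")
    print(f"compressed: {cfg.compressed_bitrate_bps() / 1e6:.2f} Mbit/s")
    print(f"frame due every {cfg.frame_interval_ms():.1f} ms")
```

With these assumptions the raw stream is roughly 9 Gbit/s, and even with 100:1 compression the link must sustain about 90 Mbit/s with a new frame due every 11 ms or so. That steady, low-latency throughput is what a cellular link on the move has trouble providing.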

nsc’s CMOS + GaN HEMT wireless platform can enable higher speeds for future 5G and 6G applications, offering the promise of moving computationally intensive tasks off the headset and into the cloud. However, the power consumption of competing 5G+ wireless communication systems is too high for a small, lightweight headset. nsc’s CMOS + GaN HEMT wireless platform promises to reduce power consumption by enabling system-level optimizations in the wireless signal chain that improve the efficiency of power-saving operating modes, and by reducing peak power with high-efficiency power amplifiers. While often overlooked, wireless technology is a critical linchpin for enabling highly immersive AR headsets with the level of functionality today’s software developers imagine.
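
The link between amplifier efficiency and battery size follows from a first-order model: the DC power a power amplifier (PA) draws from the battery is its RF output power divided by its efficiency. The sketch below applies that model; the 23 dBm output level and the 30% and 50% efficiencies are illustrative assumptions, not nsc or competitor figures.

```python
def dbm_to_watts(p_dbm: float) -> float:
    """Convert power in dBm to watts: P[W] = 10 ** ((P[dBm] - 30) / 10)."""
    return 10 ** ((p_dbm - 30) / 10)

def pa_dc_power_w(p_out_dbm: float, efficiency: float) -> float:
    """DC supply power a PA draws to deliver p_out_dbm of RF output.

    First-order model: P_dc = P_out / efficiency. It ignores driver stages,
    supply-modulation schemes and how often the PA actually transmits.
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return dbm_to_watts(p_out_dbm) / efficiency

if __name__ == "__main__":
    p_out_dbm = 23.0  # illustrative output level (about 0.2 W)
    for eff in (0.30, 0.50):  # hypothetical lower- and higher-efficiency PAs
        print(f"efficiency {eff:.0%}: {pa_dc_power_w(p_out_dbm, eff):.3f} W from the battery")
```

Under these assumptions the higher-efficiency amplifier draws roughly 0.27 W less at the same output power, and every watt saved at peak translates directly into a smaller battery or longer runtime.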

nsc Enables Metaverse Hardware

It is clear that advancements in display and wireless connectivity technologies will be key to enabling the future of AR headsets and the Metaverse in general. Whether it is nsc’s CMOS + GaN PLE or wireless solution, our platform will enable the critical chip solutions for Metaverse hardware of the future. Without advancements in these areas, consumers will miss out on the exciting experiences that the Metaverse, and AR glasses specifically, promise to provide.

If you have any questions or would like to learn more about how nsc is paving the way for the future of the Metaverse, please contact us at contact@nscinnovation.com.